Edge Mode: Evolved with Evolv AI

Lori Rakita, Au.D. and Jumana Harianawala, Au.D.

Background

The chaotic and complex world of hearing cannot always be accommodated by concrete, manual hearing aid memories. Starkey hearing aids have always used an automatic environmental classification system that serves as the foundation for an effortless listening experience. This system monitors the environment and adapts the hearing aid parameters accordingly. This automatic adaptation works seamlessly as the hearing aid user moves from one listening environment to another. Achieving this experience requires not only precise characterization of the environment and its acoustic properties but also a technologically advanced system that provides the appropriate amount of adaptation. This allows the hearing aid wearer to keep attention focused on what’s happening in the moment, and not on the hearing aids. This is an essential element of an effortless listening experience and has been incorporated into the Evolv AI hearing aids.

The automatic environmental classifier described above can accommodate most listening situations and relieves the hearing aid user from having to switch into manual hearing aid memories. However, some listening situations are particularly complex or challenging and require a more aggressive degree of signal processing to provide improved comfort or clarity. Edge Mode is an industry-unique, extra gear of adaptation, beyond the changes made by the automatic system alone. Because Edge Mode is activated by the hearing aid user, the hearing aid can enable more aggressive changes, providing impactful improvements, based on assumptions about listening intent.

Edge Mode works by taking a “snapshot” of the acoustic environment, which involves a detailed analysis of the acoustic nuances of the sound scene. Once this snapshot is captured and Edge Mode is engaged by the listener, Edge Mode automatically optimizes for comfort or clarity, depending on the listening situation. With the double tap of the hearing aid, and now, the tap of a button in the Thrive Hearing Control app, Edge Mode adjusts gain, noise management, and directionality to optimize for the environment.

In the latest Evolv AI product family, Edge Mode has been updated with new parameter adaptations based on expanded data analytics to provide comfort and clarity in the most challenging listening situations. This means the hearing aid is better able to recognize and adapt to environments with unique listening demands. For example, situations with continuous, diffuse background noise are straightforward for the hearing aid to detect and interpret. However, environments with sporadic speech at lower volumes, such as a small cafe or restaurant, or situations with louder, low-frequency, steady-state noises (e.g., the car) require the system to account for unique acoustic considerations. Edge Mode is now finely tuned to detect the various acoustic nuances of these more complex and ambiguous listening situations. The current studies were designed to better understand the capabilities of Evolv AI Edge Mode for improving comfort and clarity and reducing listening effort in challenging and acoustically ambiguous listening environments.

Experiment 1

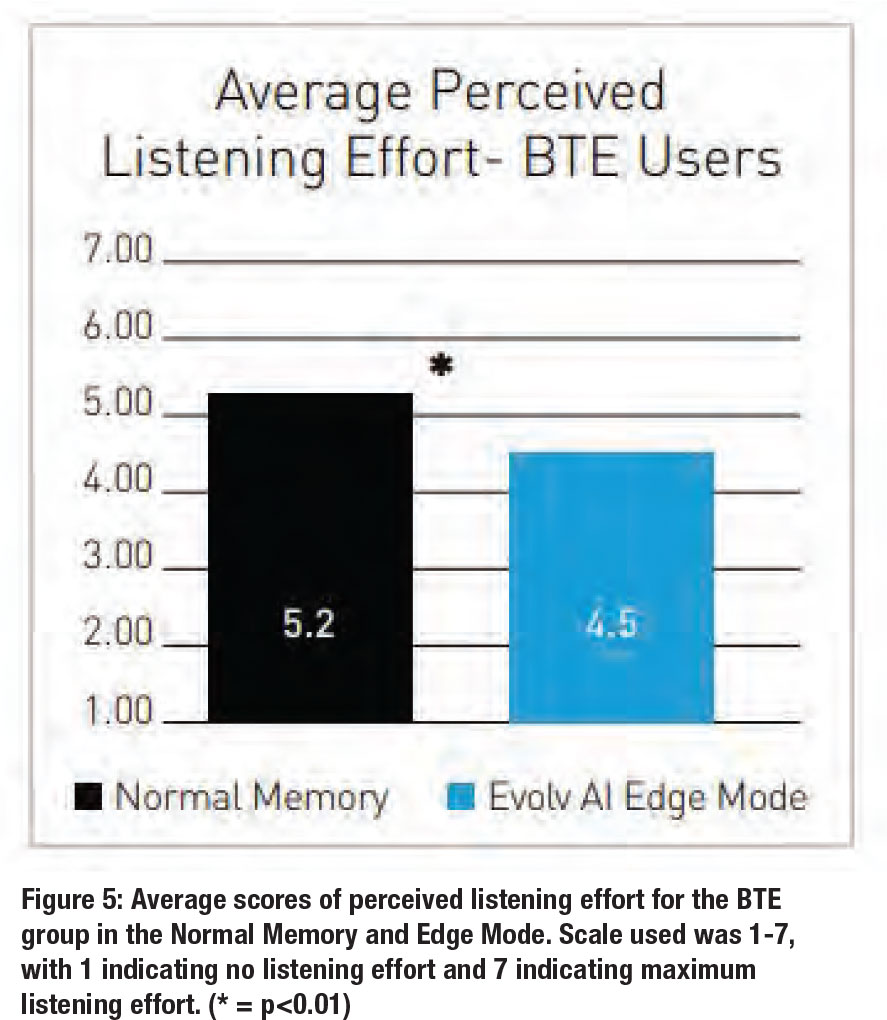

The goal of the first study was to investigate key performance differences between the automatic environmental classification memory (“Normal Memory”) and Edge Mode. Two populations of hearing aid users were used to assess Edge Mode performance: users with moderate to moderately severe hearing loss (CIC users) and users with severe-to-profound hearing loss (BTE users). Of specific interest was the ability of Edge Mode to provide additional benefit in speech understanding and/or perceived listening effort over what is provided by the automatic environmental classification (“Normal Memory”). Listening effort was an important aspect of this study. Listening for individuals with hearing loss is reported as more taxing (Kramer et al., 2006) and more commonly associated with fatigue and stress (Hétu et al., 1988) than for normal-hearing listeners. Therefore, it was important to capture the degree to which a hearing aid user was exerting effort to understand speech, since this is not captured by the speech intelligibility score. Lower perceived listening effort is (by definition) the most important indication of an effortless listening experience.

Methods: Twenty-six participants were enrolled in the current study. Thirteen participants had moderate to moderately severe degrees of hearing loss and were fitted with the Evolv AI CIC device. Thirteen participants had severe-to-profound hearing loss and were fitted with the Evolv AI Power Plus BTE 13 device. See Figure 1 for average audiogram of the participants.

Hearing Aid Programming: The CIC devices and BTE devices were programmed to first-fit (Best-Fit) to e-STAT, Starkey’s proprietary fitting formula, via the Inspire X software. BTE users had either slim tubes or earhooks with traditional tubing and an earmold, depending on the degree of hearing loss. For participants with earmolds, venting was selected based on the recommendation in the Inspire X software.

Scene for Testing: Testing was completed in a sound-treated booth. The participant was seated in the middle of an eight-speaker array, with a speaker positioned every 45 degrees from 0 degrees azimuth to 315 degrees azimuth. A scene emulating a small café or restaurant was used for testing. This scene was selected because it can be challenging for a hearing aid to interpret a scene when background noise is more variable and lower in amplitude. IEEE sentences were presented from the front speaker at zero degrees azimuth at 65 dB SPL. Multitalker babble noise was presented from all other surrounding speakers at a summated level of 60 dB SPL.
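The levels in this scene imply some simple arithmetic, sketched below. The sketch assumes the seven babble loudspeakers carry equal-level, uncorrelated signals, so that their powers add, and it ignores any room effects. These are illustrative assumptions, not details stated in the study. The function and variable names are hypothetical.

```python
import math

def per_source_level(total_db: float, n_sources: int) -> float:
    """Level each of n equal, uncorrelated sources needs so their powers sum to total_db."""
    return total_db - 10 * math.log10(n_sources)

def summed_level(levels_db) -> float:
    """Power-sum a list of dB SPL levels from uncorrelated sources."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))

SPEECH_DB = 65.0        # IEEE sentences from the front speaker (from the text)
BABBLE_TOTAL_DB = 60.0  # summated babble level (from the text)
N_BABBLE = 7            # eight-speaker array minus the front speaker

# Assumed: babble is split equally across the seven surrounding speakers.
babble_each = per_source_level(BABBLE_TOTAL_DB, N_BABBLE)

# Nominal signal-to-noise ratio at the listener position.
snr_db = SPEECH_DB - BABBLE_TOTAL_DB

print(f"Each babble speaker: {babble_each:.1f} dB SPL")
print(f"Nominal SNR: {snr_db:+.1f} dB")
```

Under these assumptions each babble loudspeaker plays at roughly 51.5 dB SPL, and the nominal signal-to-noise ratio is +5 dB.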

Figure 1: Average audiogram for research participants in Experiment 1. Red symbols represent average thresholds for the right ear, blue symbols represent average thresholds for the left ear.

Outcome Measures: There were two primary outcome measures of interest in this study. The first was speech understanding. Participants were asked to repeat back two lists of IEEE sentences in two hearing aid conditions: Edge Mode and the Normal Memory. These conditions were counterbalanced across participants, and the participants were blinded to the condition in which they were being tested. The number of words repeated back correctly was recorded for each list of IEEE sentences, and these scores across the two lists were averaged to achieve a final score for each participant. Perceived listening effort was also captured as the second outcome measure in this study. After completion of the two IEEE sentence lists for each hearing aid condition, participants were asked to rate their perceived listening effort on a scale of 1 (no effort) to 7 (maximum effort).
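The scoring procedure described in Outcome Measures can be sketched as follows. The study does not say how the condition order was counterbalanced, so simple alternation by participant index is an assumption here. All function names are hypothetical, and the numbers in the usage lines are made-up placeholders, not study data.

```python
from statistics import mean

CONDITIONS = ("Edge Mode", "Normal Memory")

def condition_order(participant_index: int):
    """Assumed counterbalancing: alternate which condition is tested first."""
    return CONDITIONS if participant_index % 2 == 0 else CONDITIONS[::-1]

def condition_score(list_scores):
    """Average the words-correct scores from the two IEEE lists for one condition."""
    if len(list_scores) != 2:
        raise ValueError("expected scores for exactly two IEEE sentence lists")
    return mean(list_scores)

def validate_effort(rating: int) -> int:
    """Check that a listening effort rating is on the 1 (no effort) to 7 (maximum effort) scale."""
    if not 1 <= rating <= 7:
        raise ValueError("effort rating must be between 1 and 7")
    return rating

# Usage with placeholder values (not study data).
order = condition_order(participant_index=1)
score = condition_score([40, 44])
effort = validate_effort(3)
print(order, score, effort)
```

Each participant ends up with one averaged speech score and one effort rating per condition, which is the form the comparison between Edge Mode and the Normal Memory requires.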

Results

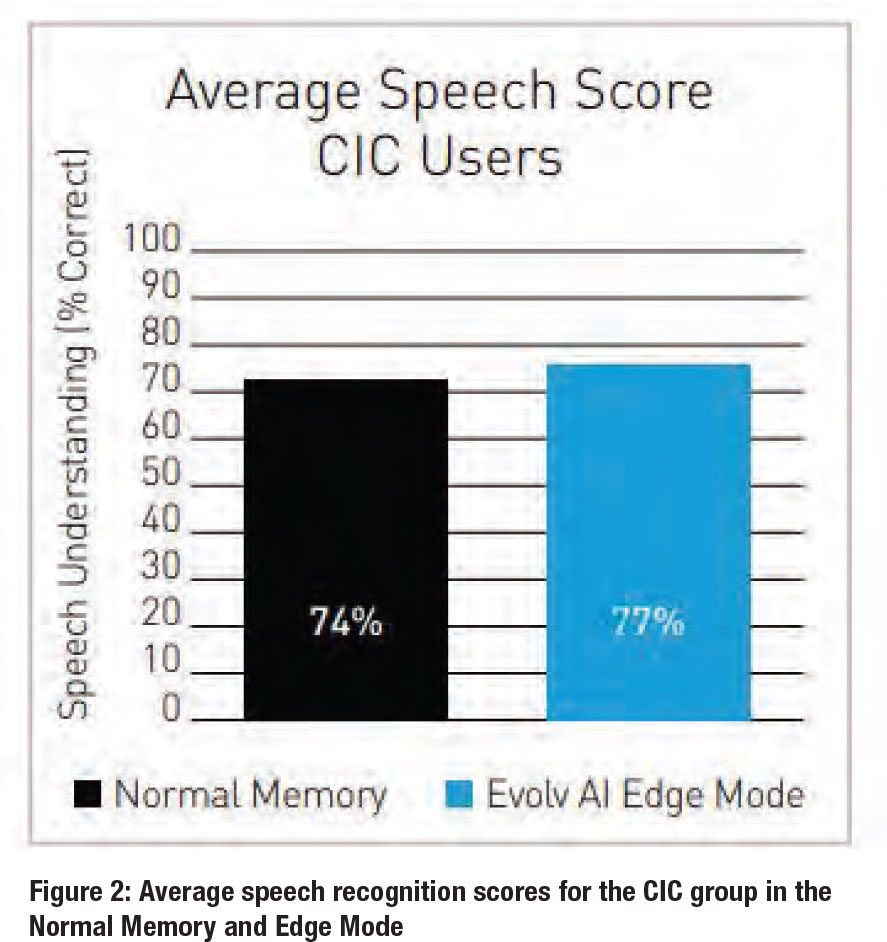

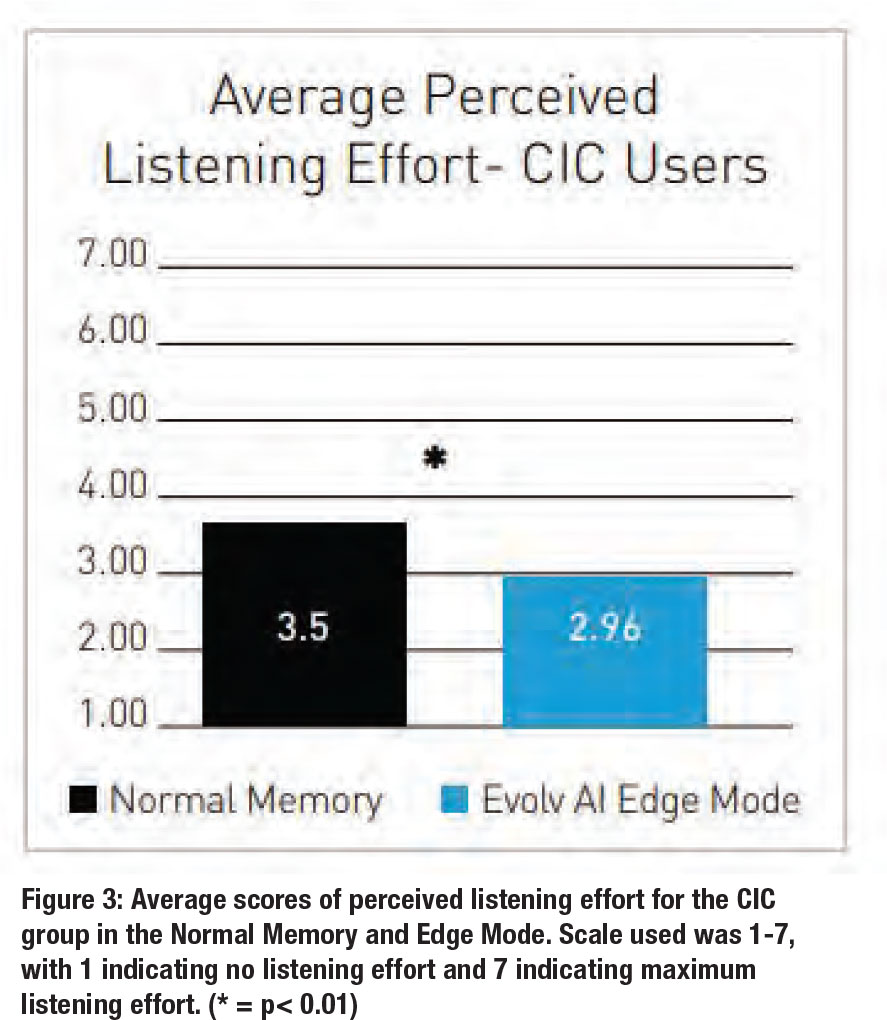

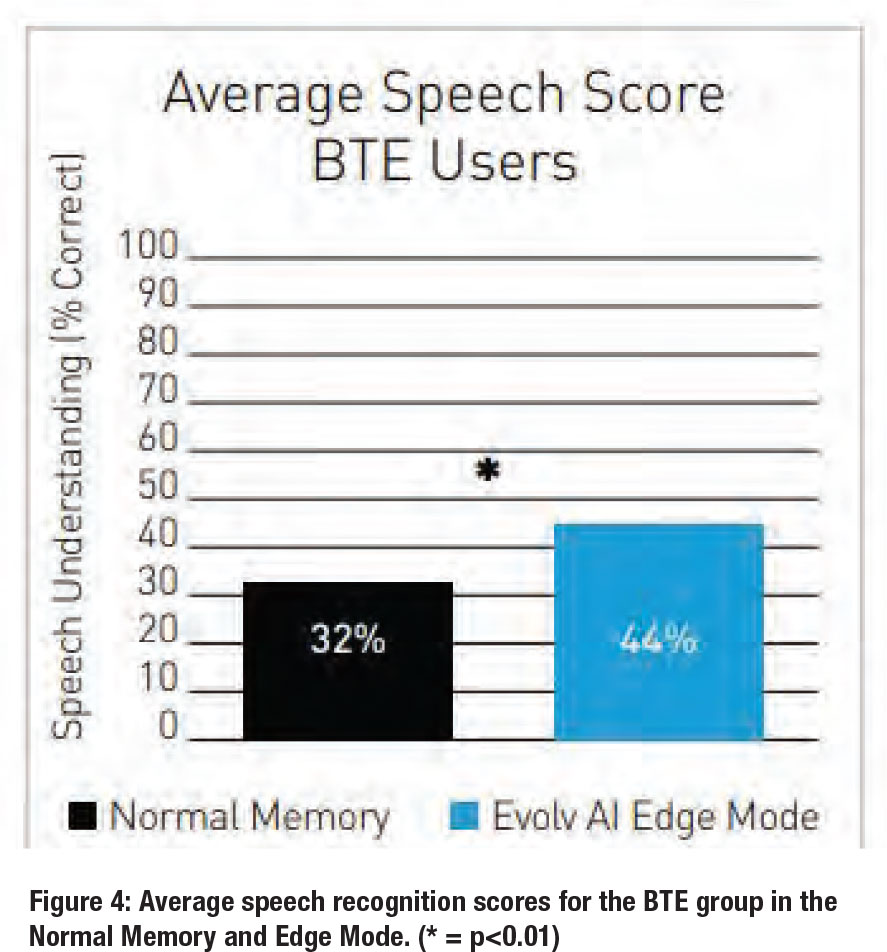

Results were averaged separately for the CIC users (Figures 2 and 3) and the BTE users (Figures 4 and 5). CIC users did not show any significant difference in speech understanding between the Normal Memory and Edge Mode, but they did report significantly lower perceived listening effort with Edge Mode than with the Normal Memory (p < 0.05). BTE users had significantly better speech understanding scores with Edge Mode than with the Normal Memory and also rated listening effort lower with Edge Mode.

Conclusion

The current study compared the automatic environmental classification system alone (“Normal Memory”) with Edge Mode in a complex acoustic scene: a small restaurant. Because this scene contains more isolated interferers, as opposed to diffuse or steady-state background noise, it is more difficult for a hearing aid to interpret and adapt to. The new Evolv AI hearing aid has been optimized to handle these listening environments.

The results showed that CIC users, overall, did not have significant difficulty with speech understanding in this scene due to the lower levels of background noise. However, when perceived listening effort was assessed, CIC users did indicate the scene was effortful, and they reported significantly less listening effort in Edge Mode than in the Normal Memory. This finding underscores the importance of collecting listening effort data in addition to speech understanding results.

BTE users had significantly better speech intelligibility scores with Edge Mode than with the Normal Memory. Listening effort was also rated lower with Edge Mode. This shows that for individuals with greater degrees of hearing loss, even softer noise can be extremely difficult. The addition of Edge Mode can provide an extra boost in performance and reduce listening effort for these individuals.

Overall, the current study provided evidence for the impact of Edge Mode on top of the automatic environmental classification system. The results suggest that Edge Mode is making more extreme changes, as evidenced by lower ratings of listening effort as well as improved speech understanding in complex listening environments for some listeners.

Experiment 2

Experiment 2 had three main purposes. The first was to extend beyond comparisons of speech understanding and listening effort to comparisons of preference for hearing aid users. The second was to explore more specific areas of comparison, including preference for speech clarity, listening comfort, and overall preference. The third was to investigate the degree to which Edge Mode provided benefit over a manually “optimized” dedicated hearing aid memory, as would be provided by a hearing healthcare provider for a particular problematic listening situation.

Methods: Fifteen participants were enrolled in Experiment 2. All participants had mild-to-moderately severe degrees of hearing loss and were fitted with the Evolv AI receiver-in-the-canal (RIC) devices. Average audiograms of the participants are shown in Figure 6 below.

Hearing Aid Programming: The RIC devices were programmed to first-fit (Best-Fit) to e-STAT, Starkey’s proprietary fitting formula, via the Inspire X software. Devices were fit with acoustic coupling appropriate for each participant’s hearing loss. Adjustments to the fittings were made upon participants’ request. Real Ear Measurements were completed for each participant to ensure acceptable hearing aid output for 55, 65, and 75 dB SPL International Speech Test Signal (ISTS) inputs.

Scene for Testing: A transportation scene was selected for testing because of its specific acoustic characteristics. Transportation noise is dominated by characteristically low-frequency, high-level noise. This complex listening situation requires a unique strategy for both identification and adaptation. A recording of actual male speech in high-level transportation noise was played from all eight loudspeakers at a summated level of 70 to 75 dB SPL. An ambisonic approach to recording and representing sound was used not only to capture the sound in the horizontal plane at the location of the side passenger but also to include the sounds and reflections from other sources and directions that make this listening environment complex and problematic.

Outcome Measures: Participants were asked to compare Edge Mode to the Normal Memory and to an optimized, dedicated memory (i.e., the dedicated car memory), two at a time, in the transportation scene described above. Participants evaluated the hearing aid settings on three judgment criteria: speech clarity, listening comfort, and overall preference. Participants completed each paired comparison twice and were blinded to the hearing aid conditions evaluated in each comparison.
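To make the size of this paired-comparison design concrete, the sketch below enumerates the judgments one participant would give. It assumes that only the two Edge Mode pairings were tested and that each of the three criteria was judged separately in each repetition. Neither detail is stated explicitly in the study, and all names are hypothetical.

```python
from itertools import product

# Assumed pairings: Edge Mode against each of the other two memories.
PAIRS = [("Edge Mode", "Normal Memory"), ("Edge Mode", "Dedicated Memory")]
CRITERIA = ["speech clarity", "listening comfort", "overall preference"]
REPETITIONS = 2       # each paired comparison was completed twice (from the text)
N_PARTICIPANTS = 15   # participants enrolled in Experiment 2 (from the text)

def trials_for_participant():
    """List every (pair, criterion, repetition) judgment for one participant."""
    return [
        {"pair": pair, "criterion": criterion, "repetition": rep}
        for pair, criterion, rep in product(PAIRS, CRITERIA, range(1, REPETITIONS + 1))
    ]

trials = trials_for_participant()
judgments_per_participant = len(trials)
judgments_per_pair_and_criterion = N_PARTICIPANTS * REPETITIONS
total_judgments = N_PARTICIPANTS * judgments_per_participant

print(judgments_per_participant, judgments_per_pair_and_criterion, total_judgments)
```

Under these assumptions each participant contributes 12 judgments, and each criterion within a pairing collects 30 preferences across the group.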

Results

The number of preferences for each set of conditions is shown in Figures 7 and 8. Figure 7 shows the number of preferences for speech clarity, listening comfort, and overall preference between Edge Mode and the Normal Memory. The results indicate a greater number of preferences for Edge Mode over the Normal Memory regarding speech clarity and overall preference. Figure 8 shows the number of preferences for speech clarity, listening comfort, and overall preference between Edge Mode and an optimized, dedicated hearing aid memory. The results indicate a strong preference for Edge Mode over the dedicated hearing aid memory for listening comfort and overall preference.

Conclusion

The results of Experiment 2 suggest that individuals with hearing loss show an overall preference for Edge Mode in challenging listening environments that require specific listening adaptations. Experiment 2 supports the results of Experiment 1, which demonstrated lower perceived listening effort and, for some listeners, better speech understanding with Edge Mode. This study also indicates greater preference for Edge Mode over an optimized dedicated memory. These findings indicate that hearing healthcare providers can feel comfortable that Edge Mode will provide an appropriate level of adaptation that is equivalent to or even better than what can be provided by a manual hearing aid memory, without counseling or assigning manual memories.

The scenes used for testing in Experiment 1 and Experiment 2 were chosen because they a) can be very difficult for hearing aid users and b) are typically ambiguous and therefore more difficult for a hearing aid to interpret. Both studies demonstrate the benefit of Edge Mode for these types of listening situations. Tapping the hearing aid or activating Edge Mode in the app will adapt the hearing aid to any listening environment, including the complex or ambiguous listening environments that are typically difficult for a hearing aid to interpret and optimize. Without requiring the user to think about specific dedicated memories, Edge Mode provides the extra gear of adaptation, beyond the changes that happen automatically, to provide comfort, clarity, and reduced listening effort for the hearing aid user.

References

- Hétu, R., Riverin, L., Lalande, N., Getty, L., & St-Cyr, C. (1988). Qualitative analysis of the handicap associated with occupational hearing loss. Br. J. Audiol., 22, 251–264. doi: 10.3109/03005368809076462

- Kramer, S. E., Kapteyn, T. S., & Houtgast, T. (2006). Occupational performance: Comparing normally-hearing and hearing-impaired employees using the Amsterdam checklist for hearing and work. Int. J. Audiol., 45, 503–512.

Lori Rakita, Au.D., joined Starkey Hearing Technologies in 2021 as the Director of Clinical Research. In her current position, she is responsible for output of the Research Department and organizing efforts related to product and feature development, validation, and studies that answer key audiological questions. Prior to Starkey, Dr. Rakita led teams in industry and medical settings in research efforts related to hearing aid performance, the effectiveness of signal processing, and the needs of individuals with hearing loss.

Jumana Harianawala, Au.D., is a Senior Fitting Systems Design Engineer at Starkey Hearing Technologies in Eden Prairie, Minnesota. Jumana specializes in improving hearing aid fittings and adaptations using progressive concepts & techniques like environment momentary assessment-based tuning. Jumana also has 11 years of experience in qualitative and quantitative research investigating benefits from innovative technologies that lead to the development of new features and products.